In the ever-evolving landscape of data-driven decision-making, the quality of data is paramount. Raw data is often messy, filled with inconsistencies, missing values, and outliers, which can compromise the integrity of analyses and machine learning models.

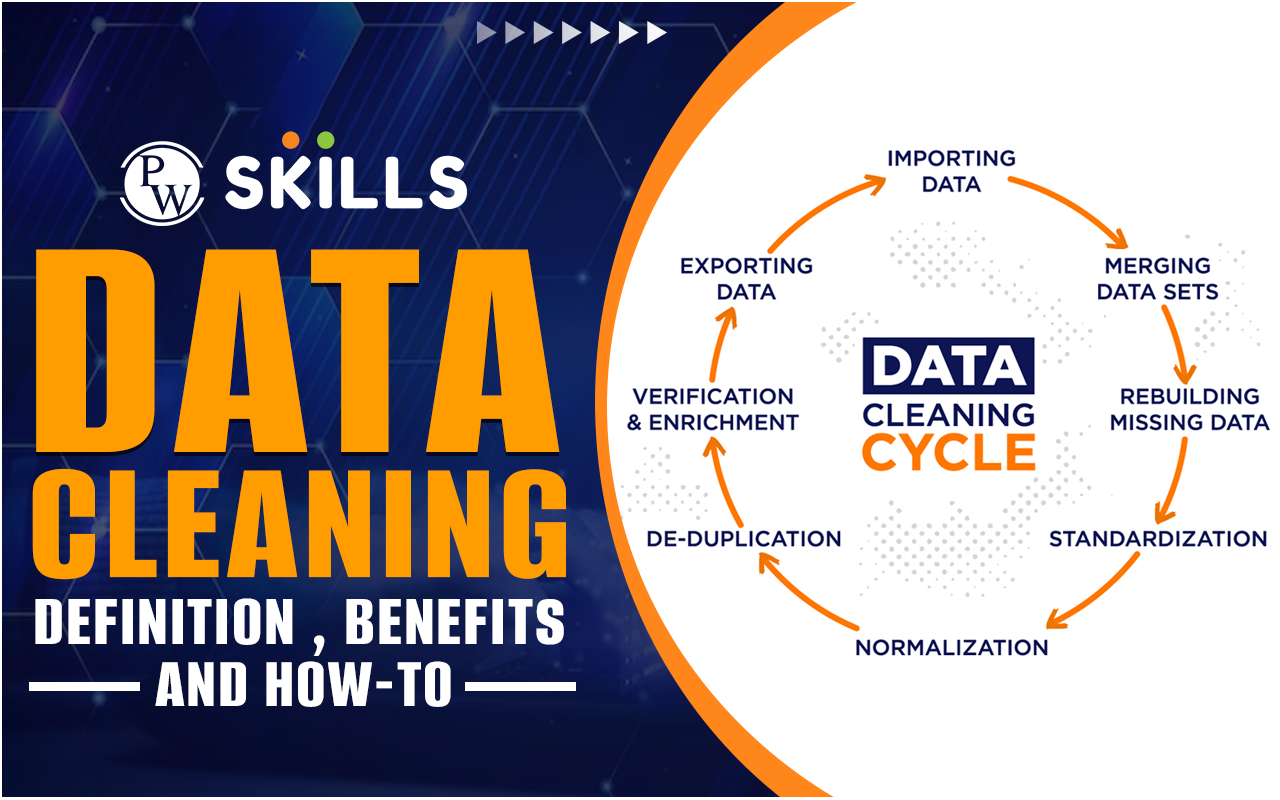

This is where data cleaning comes into play—a crucial process in the data science and analytics journey. In this blog, we’ll talk about data cleaning, its components, advantages and benefits, data cleaning in data science, data cleaning in ML, and much more!

Join the Data Science with ML 1.0 and gain the skills to transform data into actionable insights. Learn to harness the power of Python, SQL, Spark, and Hadoop, and master advanced techniques like machine learning and deep learning. Enroll now and become a data scientist who drives change!

What Is Data Cleaning?

Data cleaning, or data cleansing, is about finding and fixing errors or inconsistencies in datasets. These errors come from different places, like mistakes in data entry, system problems, or external factors. The aim of data cleaning is to improve the accuracy and reliability of data, making it good for analysis or use in machine learning.

In various sectors, from finance and healthcare to marketing and academia, having high-quality data is crucial. Wrong or incomplete data can lead to wrong insights, bad decisions, and a waste of resources. Data cleaning acts as the guardian of data integrity, ensuring that organisations can trust the information they leverage for critical operations.

Data mining, the process of discovering patterns and insights from large datasets, heavily relies on clean data. Noise and inaccuracies in the data can lead to the discovery of false patterns or trends. Data cleaning in the context of data mining involves preparing the data for analysis by addressing issues like missing values and outliers.

Also read: What is Data Quality? Dimensions, Frameworks, Standards

Data Cleaning in Python

Python is a popular choice for data cleaning, thanks to its many libraries. Libraries like Pandas, NumPy, and scikit-learn offer strong tools for handling and cleaning data. Python’s readability and flexibility make it great for both beginners and experienced data scientists working on data cleaning tasks.

Common Data Cleaning Techniques

Handling Missing Values

- Imputation methods (mean, median, mode)

- Deleting rows or columns with missing values

- Advanced imputation techniques (K-nearest neighbours, regression)

Outlier Detection and Treatment

- Visualisation techniques (box plots, scatter plots)

- Statistical methods (Z-score, IQR)

- Transformation or removal of outliers

Duplicate Data Removal

- Identifying duplicate records

- Choosing appropriate deduplication strategies

Standardising and Normalising Data

- Scaling features to a standard range

- Normalising data for consistent comparisons

Dealing with Inconsistent Data

- Addressing inconsistent formats (date formats, text casing)

- Standardising units and measures

Data Cleansing vs. Data Cleaning

The terms data cleansing and data cleaning are used interchangeably but differ subtly. Data cleaning corrects errors, while data cleansing involves duplication and transformation for superior data quality. Both processes share the goal of boosting data quality, fostering confidence in decision-making for successful outcomes. For instance, cleaning data for accuracy may involve cleansing steps to ensure consistency and eliminate duplicates.

Data Cleaning in Data Science

Data cleaning is not a one-time activity but an integral part of the data science lifecycle. It precedes exploratory data analysis (EDA) and model development, setting the stage for meaningful insights and accurate predictions. Including data cleaning as a routine step ensures that subsequent analyses are built on a solid foundation.

Data scientists rely on accurate and representative data to train models effectively. Inaccuracies or biases in the training data can lead to models that perform poorly or, worse, perpetuate unfair or discriminatory outcomes. Data cleaning is, therefore, a safeguard against the propagation of flawed patterns in machine learning models.

Data Cleaning in Machine Learning

The success of machine learning models hinges on the quality of input data. Data cleaning is a critical part of the preprocessing steps, preparing the data for training and evaluation. Common preprocessing steps include feature scaling, encoding categorical variables, and handling missing values—all of which fall under the umbrella of data cleaning.

Clean data contributes to the robustness and generalisation ability of machine learning models. Models trained on clean data are more likely to make accurate predictions on new, unseen data. Moreover, data cleaning aids in the identification and mitigation of overfitting, a common challenge in machine learning where models perform well on training data but poorly on new data.

Consider a predictive maintenance scenario where machine learning is used to anticipate equipment failures. If the training data contains inconsistent timestamps or missing sensor readings, the model’s predictions may be unreliable. Through careful data cleaning, these issues can be addressed, leading to a more effective predictive maintenance model.

Also read: Data Mining Vs Machine Learning – PW Skills

How to Clean Data: A Step-by-Step Guide

Before diving into cleaning, it’s crucial to understand the nature of the data. This involves exploring summary statistics, visualisations, and domain knowledge to identify potential issues. After you’ve done with the basics, follow these steps:

- Identifying and Handling Missing Values: Methods such as imputation or deletion are employed based on the extent of missingness and the impact on analysis or modelling.

- Detecting and Managing Outliers: Outliers can significantly impact statistical analyses and machine learning models. Visualisation and statistical methods help identify and decide on the treatment of outliers.

- Removing Duplicate Entries: Duplicate records can distort analyses and lead to biassed results. Identifying and removing duplicates ensure that each data point contributes uniquely to the analysis.

- Standardising and Normalising Features: Standardisation ensures that different features are on the same scale, while normalisation adjusts values to a standard range, preventing certain features from dominating others.

- Ensuring Consistency in Data: Addressing inconsistent formats, units, and measures ensures that the data is uniform and interpretable. Standardising these elements facilitates smoother analyses and model training.

Components of Quality Data

Here are some of the key components of quality data:

- Accuracy

Accurate data reflects the true values of the measured attributes, minimising errors and discrepancies.

- Completeness

Complete data contains all the necessary information required for the intended analysis or modelling.

- Consistency

Consistent data follows uniform formats, units, and measures, reducing ambiguity and improving comparability.

- Timeliness

Timely data is up-to-date, ensuring that analyses and models are based on relevant information.

- Relevance

Relevant data aligns with the objectives of the analysis or model, filtering out unnecessary information.

Also read: 5 Challenges of Data Analytics Every Data Analyst Faces!

Advantages and Benefits of Data Cleaning

In the ever-expanding realm of data-driven decision-making, the advantages and benefits of data cleaning are multifaceted, impacting various facets of business operations, analytics, and strategic planning.

Improved Decision-Making

- Enhanced Accuracy and Reliability: Data cleaning guarantees precise, dependable, and error-free information for decision-making. This cultivates confidence in decisions, resulting in greater success.

- Minimise Risk of Misinterpretation: Clean data reduces the risk of misinterpreting trends or drawing incorrect conclusions. Decision-makers can rely on the integrity of the data, making strategic choices with a clearer understanding of the underlying information.

- Aligned Business Strategies: Clean data aligns seamlessly with business strategies. It enables organisations to make decisions that are closely tied to their objectives, ensuring that the chosen path forward is in line with overarching goals.

Enhanced Accuracy in Analytics

- Robust Statistical Analysis: Statistical analysis heavily depend on the quality of input data. Clean data ensures that statistical procedures yield robust and meaningful results, providing a solid foundation for subsequent insights and actions.

- Accurate Predictive Modelling: For machine learning and predictive modelling, the accuracy of the model is contingent on the quality of training data. Data cleaning mitigates biases, reduces noise, and ensures that models can make accurate predictions on new, unseen data.

- Effective Pattern Recognition: Reliable data facilitates accurate pattern recognition. Whether it’s identifying market trends, consumer behaviours, or operational inefficiencies, clean data ensures that patterns discovered are reflective of real-world scenarios.

Increased Efficiency in Processes

- Streamlined Workflows: Data cleaning acts as a proactive measure, streamlining workflows by addressing data issues at the source. This minimises the need for ad-hoc corrections downstream, saving time and resources.

- Reduced Manual Intervention: Automation in data cleaning processes reduces the reliance on manual intervention. This not only accelerates the cleaning process but also minimises the risk of human error, contributing to overall operational efficiency.

- Faster Decision Cycles: Quick and reliable access to clean data accelerates decision cycles. Organisations can respond promptly to changing market conditions, emerging opportunities, or potential challenges, gaining a competitive edge.

Building Trust in Data-Driven Insights

- Stakeholder Confidence: Clean data instil confidence in stakeholders, fostering a culture where decisions are made based on data-driven insights. Stakeholders, from executives to front-line employees, are more likely to trust and act upon information derived from clean datasets.

- Transparent Reporting: Transparent reporting is a natural outcome of data cleaning. Clean datasets contribute to clear and comprehensible reports, making it easier for stakeholders to understand and interpret the presented information.

- Compliance and Accountability: In regulated industries, data cleanliness is often tied to compliance. Clean data ensures that organisations can be held accountable for their decisions and actions, meeting regulatory standards and industry best practices.

Long-Term Strategic Impact

- Data-Driven Innovation: Organisations leveraging clean data are better positioned for innovation. Clean datasets serve as a foundation for advanced analytics, artificial intelligence, and other cutting-edge technologies, unlocking new possibilities and avenues for growth.

- Adaptability to Change: Clean data makes organisations more adaptable to change. Whether facing shifts in market dynamics, technological advancements, or evolving customer preferences, the reliability of clean data allows for agile responses and strategic pivots.

- Continuous Improvement: A commitment to data cleaning reflects a dedication to continuous improvement. By regularly refining and enhancing data quality, organisations establish a culture that prioritises excellence in data management, setting the stage for sustained success.

Data Cleaning Tools and Software

Here are some of the most popular data cleaning tools:

OpenRefine

- User-friendly interface for data cleaning

- Powerful transformations and clustering capabilities

Trifacta

- AI-powered data cleaning platform

- Visual profiling and smart suggestions for cleaning steps

Pandas (Python Library)

- Widely used for data manipulation and cleaning in Python

- Comprehensive functionality for handling missing values, duplicates, and more

How to Choose the Right Tool for Your Needs?

Considerations include the scale of data, the complexity of cleaning tasks, and the integration with other tools or platforms. User-friendliness and support for automation are also crucial factors.

Also read: Essential Data Analytics Tools for Successful Analysis

Conclusion

Data cleaning is not just a technical step in the data pipeline; it’s a cornerstone of reliable and impactful data-driven insights. Cultivating a mindset of data quality from the beginning of any data-related endeavour is essential for long-term success. As data continues to grow in volume and complexity, automated and intelligent data cleaning solutions are likely to play an increasingly pivotal role in ensuring data quality. In the ever-changing realm of data science and analytics, data cleaning is a foundational process, guaranteeing accurate and actionable insights. Adopting the practice of data cleaning is not just a best practice; it’s a strategic necessity for achieving excellence in data-driven decision-making.

FAQs

How often should data cleaning be performed?

Data cleaning should be a routine part of the data management process. The frequency depends on the nature of your data and how often it is updated. Regular assessments, especially before significant analyses or model training, help maintain data integrity.

Can data cleaning be automated, and what tools support automation?

Yes, data cleaning can be automated. Tools like Trifacta and OpenRefine offer automation features, streamlining the process. Automation not only saves time but also reduces the risk of human error, ensuring consistent data quality.

Is data cleaning necessary for small datasets or simple analyses?

Yes, data cleaning is essential regardless of dataset size. Even small datasets can contain errors, missing values, or inconsistencies that can impact analyses. The principles of data cleaning are universally applicable and contribute to result accuracy.

How does data cleaning contribute to regulatory compliance?

Clean data is a cornerstone of regulatory compliance. Ensuring accuracy, completeness, and transparency through data cleaning practices helps organisations meet regulatory standards. It provides an auditable trail, demonstrating data reliability and accountability.

Can data cleaning uncover hidden patterns or insights?

Yes, data cleaning plays a pivotal role in revealing meaningful patterns. By removing noise, addressing inconsistencies, and ensuring data accuracy, the cleaned dataset becomes a more reliable foundation for discovering hidden insights that might be obscured by inaccuracies in raw data.